I conducted a paid survey via AYTM on the topic of sleep. I wanted to find out which variables (psychological, lifestyle, circumstance) have a noticeable effect on sleep-related issues such as nightmares, sleep duration and the time needed to fall asleep. Now that I have the raw data, there are a ton of relationships to analyze, and that will take time. But I've already found a few neat, statistically significant results, some expected, some rather surprising. I'll publish them, as well as the results yet to be found, here on my blog in the coming weeks.

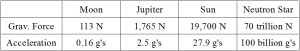

Geographic Region: US

Number of Respondents: 250

Males: 96 (38.4 %)

Females: 154 (61.6 %)

Minimum Age: 18

Median Age: 36

Maximum Age: 81

White-American: 163 (65.2 %)

African-American: 23 (9.2 %)

Asian-American: 17 (6.8 %)

Hispanic-American: 28 (11.2 %)

Other: 19 (7.6 %)

Here's what I've extracted from the data so far. Statistical significance was determined via a two-population Z-test. Pay attention to the p-value: it is the probability of getting a difference at least as large as the one observed if there were no real effect, that is, if random fluctuation alone were at work. Hence, the lower the p-value, the harder it is to explain the result as mere chance. A p-value of 0.05 means that, with no real effect, a difference this large would turn up in only about 1 in 20 surveys like this one; a p-value of 0.01 means about 1 in 100. All of the results below are significant at p < 0.05, some even at p < 0.01.
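For readers who want to check the numbers themselves, here is a minimal sketch of a pooled two-proportion Z-test in plain Python (the function name is my own). It returns the one-tailed p-value for the hypothesis that the first group has the higher rate. Plugging in the nightmare numbers from the AGE section reproduces the Z-score of about 3.706.

```python
from math import erfc, sqrt

def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Pooled two-proportion Z-test.

    p1, p2 are sample proportions (0..1), n1, n2 the group sizes.
    Returns (z, one-tailed p-value) for the alternative p1 > p2.
    """
    # Pooled proportion under the null hypothesis that both groups share one rate
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    # Standard error of the difference under that null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Upper-tail probability of the standard normal: P(Z >= z)
    p_one_tailed = 0.5 * erfc(z / sqrt(2))
    return z, p_one_tailed

# Nightmares: median age or younger vs. older than median age
z, p = two_proportion_z_test(0.496, 127, 0.268, 123)
print(f"z = {z:.3f}, one-tailed p = {p:.5f}")
```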

By the way: if you've got your own data, you can let this great website do a two-population Z-test for you. It only works, though, if your results are given as percentages. To learn more about hypothesis testing, including how to perform a Z-test when the data is not given as percentages, check out this great book by Leonard Gaston.

——————————————————————————

AGE:

——————————————————————————

————————–

Hypothesis: Young people have more nightmares

————————–

Percentage with nightmares among people at or below the median age: 49.6 %

Number of respondents: 127

Percentage with nightmares among people older than the median age: 26.8 %

Number of respondents: 123

The Z-Score is 3.706. The p-value is 0.0001. The result is significant at p < 0.01.

Linear fit of the probability of nightmares against age: Probability = 0.757 – 0.00958·Age

(Every year of age, the chance of nightmares goes down by roughly one percentage point.)
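The fitted line can be evaluated directly for any age. A minimal sketch (the function name is my own): note that at the maximum age in the sample, 81, the raw line gives 0.757 – 0.00958·81 ≈ –0.019, slightly below zero. A straight line fitted to probabilities can leave the 0 to 1 range, so the sketch clamps the result and the line is best read as a trend within the sampled ages.

```python
def nightmare_probability(age: float) -> float:
    """Evaluate the linear fit Probability = 0.757 - 0.00958 * Age,
    clamped to [0, 1] because a straight line can leave that range."""
    raw = 0.757 - 0.00958 * age
    return min(1.0, max(0.0, raw))

# Youngest, median and oldest respondent ages from the survey
for age in (18, 36, 81):
    print(age, round(nightmare_probability(age), 3))
```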

————————–

Hypothesis: Young people are more light-sensitive

————————–

Percentage of light-sensitive people among those at or below the median age: 62.2 %

Number of respondents: 127

Percentage of light-sensitive people among those older than the median age: 49.6 %

Number of respondents: 123

The Z-Score is 2.0065. The p-value is 0.02222. The result is significant at p < 0.05.

Linear fit of the probability of light sensitivity against age: Probability = 0.756 – 0.00504·Age

(Every two years of age, the chance of light sensitivity goes down by roughly one percentage point.)

————————–

Hypothesis: Older people more frequently take naps

————————–

Percentage taking naps among people at or below the median age: 23.6 %

Number of respondents: 127

Percentage taking naps among people older than the median age: 33.3 %

Number of respondents: 123

The Z-Score is 1.7009. The p-value is 0.04457. The result is significant at p < 0.05.

Linear fit of the probability of taking naps against age: Probability = 0.194 + 0.00232·Age

(Every four years of age, the chance of taking naps goes up by roughly one percentage point.)
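The parenthetical rules of thumb for the three age fits are read off the slopes: one percentage point is 0.01 on the probability scale, so it takes 0.01 / |slope| years to change the fitted probability by that much. A quick sketch (the function name is my own) gives about 1.0, 2.0 and 4.3 years for the three slopes.

```python
def years_per_percentage_point(slope: float) -> float:
    """Years of age needed for the fitted probability to change by 0.01."""
    return 0.01 / abs(slope)

# Slopes (change in probability per year of age) from the three age fits
slopes = {"nightmares": -0.00958, "light sensitivity": -0.00504, "naps": 0.00232}

for outcome, slope in slopes.items():
    direction = "down" if slope < 0 else "up"
    years = years_per_percentage_point(slope)
    print(f"{outcome}: {direction} one percentage point every {years:.1f} years")
```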

——————————————————————————

GENDER:

——————————————————————————

————————–

Hypothesis: Females wake up more frequently at night

————————–

Percentage of males who frequently wake up at night: 51.1 %

N = 96

Percentage of females who frequently wake up at night: 64.3 %

N = 154

The Z-Score is 2.081. The p-value is 0.03752. The result is significant at p < 0.05.
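A one-tailed test asks whether one specific group is higher, as each hypothesis here is phrased; a two-tailed test asks whether the groups differ in either direction. For the same Z-score the two-tailed p-value is exactly twice the one-tailed one, so it's worth knowing which one a calculator reports. A minimal sketch for the Z-score above (the function name is my own):

```python
from math import erfc, sqrt

def p_values(z: float) -> tuple[float, float]:
    """Return (one-tailed, two-tailed) p-values for a standard-normal Z-score."""
    one_tailed = 0.5 * erfc(abs(z) / sqrt(2))  # P(Z >= |z|)
    two_tailed = erfc(abs(z) / sqrt(2))        # P(|Z| >= |z|)
    return one_tailed, two_tailed

one, two = p_values(2.081)
print(f"one-tailed: {one:.5f}, two-tailed: {two:.5f}")
```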

————————–

Hypothesis: Males have a higher peak waking duration

————————–

Peak waking duration for males: 40.6 h

SEM (standard error of the mean): 1.76 h

Peak waking duration for females: 36.6 h

SEM: 1.33 h

The Z-Score is 1.8132. The p-value is 0.0349. The result is significant at p < 0.05.
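For averages such as the peak waking duration, the Z-test works on means and their standard errors instead of percentages: the standard error of the difference is the square root of the sum of the squared SEMs. A minimal sketch, assuming the two groups are independent and large enough for the normal approximation (the function name is my own); the numbers above reproduce Z ≈ 1.8132 and p ≈ 0.0349.

```python
from math import erfc, sqrt

def two_mean_z_test(mean1: float, sem1: float, mean2: float, sem2: float) -> tuple[float, float]:
    """Z-test for the difference of two independent means, given each
    group's standard error of the mean (SEM).
    Returns (z, one-tailed p-value) for the alternative mean1 > mean2."""
    # SEMs of independent groups add in quadrature
    se_diff = sqrt(sem1 ** 2 + sem2 ** 2)
    z = (mean1 - mean2) / se_diff
    p_one_tailed = 0.5 * erfc(z / sqrt(2))
    return z, p_one_tailed

# Peak waking duration in hours: males vs. females
z, p = two_mean_z_test(40.6, 1.76, 36.6, 1.33)
print(f"z = {z:.4f}, one-tailed p = {p:.4f}")
```

The same function applied to the other averages in this post (time to fall asleep, time to feel fully awake) follows the identical recipe.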

——————————————————————————

INCOME:

——————————————————————————

————————–

Hypothesis: People with low income daydream more

————————–

Percentage of people with low income who frequently daydream: 40.1 %

N = 142

Percentage of people with high income who frequently daydream: 26.9 %

N = 108

The Z-Score is 2.1764. The p-value is 0.01463. The result is significant at p < 0.05.
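Besides the p-value, a confidence interval shows how large a difference might plausibly be. A minimal sketch of the standard (Wald) 95 % interval for a difference of two proportions; this is an addition for illustration, not part of the original analysis. For the daydreaming numbers it comes out at roughly 1.6 to 24.8 percentage points, which excludes zero but is fairly wide.

```python
from math import sqrt

def diff_proportion_ci(p1: float, n1: int, p2: float, n2: int,
                       z_crit: float = 1.96) -> tuple[float, float]:
    """Approximate (Wald) confidence interval for p1 - p2; z_crit=1.96 gives ~95 %."""
    # Unpooled standard error: no assumption that the two rates are equal
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    diff = p1 - p2
    return diff - z_crit * se, diff + z_crit * se

# Frequent daydreaming: low income vs. high income
low, high = diff_proportion_ci(0.401, 142, 0.269, 108)
print(f"95 % CI for the difference: {low:.3f} to {high:.3f}")
```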

————————–

Hypothesis: People with low income take longer to fall asleep

————————–

Time to fall asleep for people with low income: 31.4 min

SEM: 1.44 min

Time to fall asleep for people with high income: 22.9 min

SEM: 1.08 min

The Z-Score is 4.7222. The p-value is < 0.00001. The result is significant at p < 0.01.
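The same idea carries over to averages: the difference in time to fall asleep is 8.5 min, and the standard error of that difference is √(1.44² + 1.08²) = 1.8 min. A minimal sketch of the corresponding approximate 95 % interval, assuming independent groups and the normal approximation (the function name is my own); it gives roughly 5.0 to 12.0 minutes.

```python
from math import sqrt

def diff_mean_ci(mean1: float, sem1: float, mean2: float, sem2: float,
                 z_crit: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for mean1 - mean2 from the two SEMs
    (z_crit=1.96 gives ~95 % under the normal approximation)."""
    se_diff = sqrt(sem1 ** 2 + sem2 ** 2)
    diff = mean1 - mean2
    return diff - z_crit * se_diff, diff + z_crit * se_diff

# Time to fall asleep in minutes: low income vs. high income
low, high = diff_mean_ci(31.4, 1.44, 22.9, 1.08)
print(f"95 % CI: {low:.1f} to {high:.1f} min")
```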

——————————————————————————

DEPRESSION:

——————————————————————————

————————–

Hypothesis: Depressed people have more nightmares

————————–

Percentage of depressed people with nightmares: 57.1 %

Number of respondents: 84

Percentage of non-depressed people with nightmares: 28.9 %

Number of respondents: 166

The Z-Score is 4.3308. The p-value is < 0.0001. The result is significant at p < 0.01.
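A p-value is never exactly zero; very small values merely round to zero at a fixed number of decimals. A minimal sketch for Z = 4.3308: the one-tailed p-value is roughly 7 × 10⁻⁶, which prints as 0.0000 at four decimals but is visible in scientific notation. The complementary error function is used because it computes the tail directly.

```python
from math import erfc, sqrt

def one_tailed_p(z: float) -> float:
    """Upper-tail p-value P(Z >= z) for a standard-normal Z-score."""
    # erfc computes the tail directly, so tiny p-values keep their precision
    return 0.5 * erfc(z / sqrt(2))

p = one_tailed_p(4.3308)
print(f"{p:.4f}")  # four decimals: rounds to 0.0000
print(f"{p:.2e}")  # scientific notation shows the actual size
```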

————————–

Hypothesis: Depressed people daydream more

————————–

Percentage of depressed people who frequently daydream: 50.3 %

N = 84

Percentage of non-depressed people who frequently daydream: 26.5 %

N = 166

The Z-Score is 3.7392. The p-value is 0.00009. The result is significant at p < 0.01.

————————–

Hypothesis: Depressed people need more time to feel fully awake after a good night’s sleep

————————–

Time to feel fully awake for depressed people: 42.5 min

SEM: 2.70 min

Time to feel fully awake for non-depressed people: 28.9 min

SEM: 1.27 min

The Z-Score is 4.5580. The p-value is < 0.00001. The result is significant at p < 0.01.

——————————————————————————

OTHERS:

——————————————————————————

————————–

Hypothesis: People who need more time to fall asleep also need more time to feel fully awake after a good night’s sleep

————————–

Time to feel fully awake for people who need less than 30 minutes to fall asleep: 29.9 min

SEM: 1.77 min

Time to feel fully awake for people who need 30 minutes or more to fall asleep: 37.1 min

SEM: 1.92 min

The Z-Score is 2.7572. The p-value is 0.002915. The result is significant at p < 0.01.

Linear fit of the time to feel fully awake (FAW) against the time to fall asleep (FAS), both in minutes:

FAW = 24.7 + 0.317·FAS

(Every ten additional minutes needed to fall asleep translate into roughly three additional minutes needed to feel fully awake after a good night's sleep.)
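The post doesn't say how this line was fitted. Assuming ordinary least squares on the individual (FAS, FAW) answers, a minimal sketch of the fit looks like this (the function name is my own). The data points below are made up for illustration and are not the survey responses. If OLS was indeed the method, the same function applied to the real answers would return the published coefficients, 24.7 and 0.317.

```python
def least_squares_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y = intercept + slope * x.
    Returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-style sum and variance-style sum (the 1/n factors cancel)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical illustration only: these are NOT the survey responses
fas = [5, 10, 20, 30, 45, 60]    # minutes to fall asleep
faw = [27, 25, 33, 35, 38, 45]   # minutes to feel fully awake
intercept, slope = least_squares_line(fas, faw)
print(f"FAW = {intercept:.1f} + {slope:.3f}·FAS")
```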